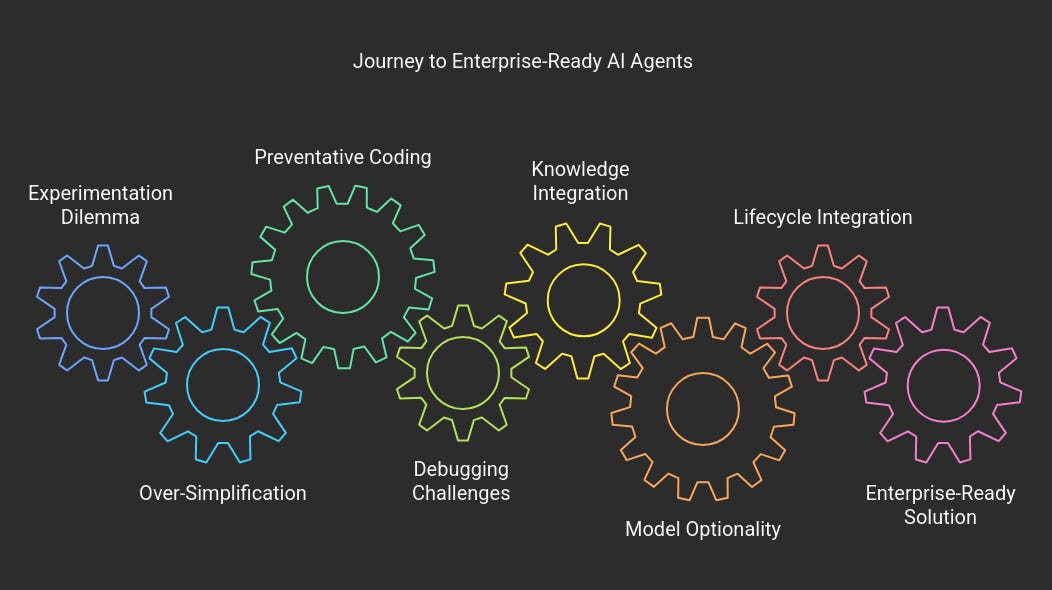

In the rapidly evolving world of AI, agent frameworks have emerged as a hot topic. Yet, amid the buzz and bold promises, a critical examination reveals that much of the current excitement might be more hype than substance. Let’s dive deep into the debate, exploring both the potential and the pitfalls of agent frameworks.

Beyond the Proof of Concept: The Experimentation Dilemma

AI agent frameworks are often lauded as a starting point for people learning to build agentic AI applications. In labs and early-stage projects, these frameworks offer a playground for developers to test ideas, prototype applications, and explore new interactions between autonomous agents. However, there’s a critical catch: these frameworks rarely enable those experiments to move beyond the proof-of-concept stage.

Why does this happen? The market appears saturated with projects and examples designed more to generate buzz, garner likes, or secure service contracts than to deliver robust, performance-tested products.

The rush to monetize AI agent frameworks and platforms has resulted in solutions that often promise to replace human effort entirely but falter when faced with real-world demands. The gap between initial innovation and mature, scalable products remains significant.

Over-Simplification and Over-Promise

A recurring theme in the current landscape is the tendency to oversimplify complex challenges.

Marketers and developers sometimes tout agent frameworks as turnkey solutions for sophisticated problems, glossing over the intricate realities involved in deploying and maintaining such systems.

The allure of a one-size-fits-all agent that can seamlessly handle diverse tasks is strong, but the reality is that successful AI integration often requires a nuanced approach.

Over-promising capabilities not only misleads potential users but also sets unrealistic expectations, leading to disillusionment when these systems fail to deliver under practical conditions.

The Hidden Work: Preventative Coding and Evaluation

Behind the scenes, the development of AI agents involves extensive "preventative coding": the proactive work of anticipating failure modes, such as malformed model output or a failed tool call, and handling them before they reach users. This work, often underappreciated in marketing materials, is vital for creating reliable AI systems.

Developers must implement rigorous evaluation and testing routines to avoid failures that could have significant repercussions in production environments. The heavy emphasis on preventative measures and continual evaluation is a reminder that AI agents are not magic bullets but complex software systems that require ongoing maintenance and refinement.
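To make "preventative coding" concrete, here is a minimal sketch of one common pattern: validate every model reply before acting on it, retry a bounded number of times, and fall back to a harmless default. The action names, the `call_model` callable, and the fallback text are all hypothetical and not taken from any particular framework.

```python
import json
from typing import Callable

# Hypothetical action names; a real agent would define its own.
ALLOWED_ACTIONS = {"search", "answer"}


def parse_action(raw: str) -> dict:
    """Validate a model reply before the agent acts on it."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    if data.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {data.get('action')!r}")
    if not isinstance(data.get("input"), str):
        raise ValueError("'input' must be a string")
    return data


def safe_step(call_model: Callable[[str], str], prompt: str, max_attempts: int = 3) -> dict:
    """Call the model, retry on invalid replies, then fall back safely."""
    for _ in range(max_attempts):
        try:
            return parse_action(call_model(prompt))
        except ValueError:
            continue  # malformed or disallowed reply: ask again rather than crash
    # Fallback: a fixed, harmless reply instead of an unhandled exception.
    return {"action": "answer", "input": "Sorry, I could not complete that request."}
```

None of this is glamorous, which is exactly the point: most of the code is about what happens when the model misbehaves, not when it works.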

Challenges in Debugging and Troubleshooting

For enterprise applications, debugging and troubleshooting are non-negotiable features. Yet many agent frameworks fall short in this critical area. The tools available for enterprise-grade debugging are often either immature or hard to access, leaving developers to grapple with opaque error messages and insufficient support.

This shortfall can be especially problematic in high-stakes environments where downtime or errors can translate into significant losses. The lack of robust debugging tools highlights a broader issue: while agent frameworks offer a promising vision of automation, they are still evolving into the kind of dependable systems that enterprises demand.
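When a framework's built-in tooling is thin, teams often end up recording their own step-by-step traces. The sketch below shows one way that might look, assuming each agent step can be wrapped as a plain function call; `Trace` and `StepRecord` are illustrative names, not part of any real framework.

```python
import time
import traceback
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class StepRecord:
    name: str
    inputs: dict
    output: Any = None
    error: Optional[str] = None
    seconds: float = 0.0


@dataclass
class Trace:
    steps: List[StepRecord] = field(default_factory=list)

    def run(self, name: str, fn: Callable[..., Any], /, **inputs: Any) -> Any:
        """Run one agent step and record its inputs, output, error, and timing."""
        record = StepRecord(name=name, inputs=inputs)
        start = time.perf_counter()
        try:
            record.output = fn(**inputs)
            return record.output
        except Exception:
            # Keep the full traceback so the failure is not an opaque message.
            record.error = traceback.format_exc()
            raise
        finally:
            record.seconds = time.perf_counter() - start
            self.steps.append(record)
```

After a failed run, `trace.steps` holds every step up to and including the one that raised, which is usually the first thing you want when a multi-step agent goes wrong.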

Integrating the Knowledge Layer, Tools, and Memory

A fully functional agent framework isn’t just about the agent itself—it’s about the ecosystem that supports it.

Key components include:

Knowledge Layer: This is the repository of domain-specific expertise that the agent needs to operate effectively.

Tools Integration: Seamlessly interfacing with various software tools and APIs is essential for a smooth operational flow.

Memory Management: Effective management of session state and long-term memory ensures that the agent can learn from past interactions and adapt over time.

Each of these elements is a piece of a larger puzzle. Neglecting any one area can result in a system that looks impressive on paper but falters in practice.
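As a rough illustration of how the three pieces fit together, here is a deliberately tiny sketch. The `Agent` class, the `tool_name: argument` routing convention, and the dictionary-backed knowledge layer are assumptions made for the example; a production system would replace each with something far more capable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    knowledge: Dict[str, str]                # knowledge layer: topic -> fact
    tools: Dict[str, Callable[[str], str]]   # tools: name -> callable
    memory: List[str] = field(default_factory=list)  # session memory: past turns

    def handle(self, request: str) -> str:
        """Route 'tool_name: argument' to a tool, otherwise look up knowledge."""
        name, sep, arg = request.partition(":")
        if sep and name.strip() in self.tools:
            reply = self.tools[name.strip()](arg.strip())
        else:
            reply = self.knowledge.get(request.strip().lower(), "I don't know.")
        # Record every turn so later steps can inspect what already happened.
        self.memory.append(f"{request} -> {reply}")
        return reply
```

Remove any one field and the gap shows immediately: without `knowledge` the agent can only call tools, without `tools` it can only recite facts, and without `memory` every request starts from zero.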

Model Optionality: Flexibility, Data Privacy, and Future-Proofing

One of the most critical—but often overlooked—aspects of robust agent frameworks is model optionality. In a landscape crowded with rapidly advancing AI models, having the flexibility to choose from a range of underlying models is essential. Model optionality offers several key benefits:

Flexibility in Performance and Cost: Different models excel at different tasks. By integrating a variety of models—from lightweight, cost-effective options to more powerful, resource-intensive ones—organizations can optimize for both performance and budget.

Mitigation of Vendor Lock-In: Relying on a single proprietary model can expose an organization to risks associated with pricing changes, performance issues, or shifts in vendor strategy. Model optionality ensures that you’re not tied down, allowing for easy switching as new, improved models become available.

Adaptability to Use Cases: As your needs evolve—whether it’s for natural language understanding, multi-modal reasoning, or specialized domain tasks—the ability to plug in the most appropriate model becomes a decisive advantage.

Enhanced Data Privacy: With data privacy a top priority for many enterprises, model optionality enables organizations to choose models that offer robust data handling and privacy controls. By having the option to deploy models on-premises or use open-source alternatives, businesses can ensure that sensitive data remains under their control and is not inadvertently exposed through third-party cloud services.

Future-Proofing Your Investment: The AI ecosystem is in constant flux, with new models and techniques emerging regularly. Frameworks that support model optionality are inherently more future-proof, ensuring long-term scalability and adaptability.

By enabling developers to seamlessly integrate and experiment with different models, agent frameworks that prioritize model optionality offer a competitive edge that moves beyond the hype of a single “miracle” model.
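In code, model optionality usually comes down to programming against a small interface rather than a vendor SDK. The following is a minimal sketch under that assumption; `ChatModel`, `ModelRouter`, and the two stand-in model classes are hypothetical and simply echo their input instead of calling a real model.

```python
from typing import Dict, Optional, Protocol


class ChatModel(Protocol):
    """The only surface the rest of the agent is allowed to depend on."""
    def complete(self, prompt: str) -> str: ...


class SmallLocalModel:
    """Stand-in for a lightweight model hosted on-premises."""
    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


class LargeHostedModel:
    """Stand-in for a larger model behind a vendor API."""
    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class ModelRouter:
    """Pick a model by name per request, with a configurable default."""

    def __init__(self, models: Dict[str, ChatModel], default: str) -> None:
        if default not in models:
            raise KeyError(default)
        self.models = models
        self.default = default

    def complete(self, prompt: str, model: Optional[str] = None) -> str:
        return self.models[model or self.default].complete(prompt)
```

Swapping vendors, or keeping sensitive prompts on the local model, then becomes a change to the dictionary passed to `ModelRouter` rather than a rewrite of the agent.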

Complete Agent Lifecycle Integration: The Key to Fast Iteration

Mature AI systems are built over multiple iterations. Fast iteration cycles are crucial, but they depend on a well-orchestrated lifecycle that includes:

Deployment Monitoring: Keeping an eye on how agents perform in live environments is essential for identifying and addressing issues swiftly.

Continual Updates: Regular updates help agents adapt to new challenges, learn from previous mistakes, and improve overall performance.

Orchestration: Coordinating the various components—from the knowledge layer to memory and tools integration—ensures that the agent operates as a cohesive unit.

It is only through this iterative process that agent products can mature into reliable solutions. Fast iteration isn’t just about speed; it’s about having the right infrastructure in place to support continuous improvement.
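Deployment monitoring does not have to start with a full observability stack. As a small sketch of the idea, the class below tracks a rolling success rate over recent agent runs and flags when it drops below a threshold; the name `RollingMonitor`, the window size, and the threshold are all illustrative assumptions.

```python
from collections import deque


class RollingMonitor:
    """Track the success rate of the most recent agent runs."""

    def __init__(self, window: int = 100, min_success_rate: float = 0.9) -> None:
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.min_success_rate = min_success_rate

    def record(self, success: bool) -> None:
        self.outcomes.append(success)

    @property
    def success_rate(self) -> float:
        # With no data yet, report 1.0 so an idle agent does not raise an alarm.
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        return self.success_rate < self.min_success_rate
```

A signal like `needs_attention()` is what closes the loop between monitoring and the next update: it tells you when an iteration is due, not just that one happened.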

Conclusion: Hype vs. Reality

Agent frameworks have undoubtedly sparked excitement and innovation, but the current state of the options is a mixed bag. While they serve as excellent experimentation tools and starting points, many remain confined to the realm of proof-of-concept and have a long way to go before they are enterprise-ready. The journey from a promising prototype to an enterprise-ready solution is fraught with challenges: oversimplification, over-promising, extensive preventative coding, and inadequate debugging tools. Fully integrated lifecycle management, including robust model optionality, evaluation, deployment, and post-deployment monitoring, is key to avoiding frustration.

For AI agents to truly revolutionize industries, the market must shift its focus from hype to substance. Embracing the complexity of these systems, investing in robust development practices, and acknowledging that maturation takes time will be essential. Only then can we move beyond the allure of quick fixes and towards a future where AI agents deliver on their bold promises.