Moving from Model Learning to Agent Learning in 2025

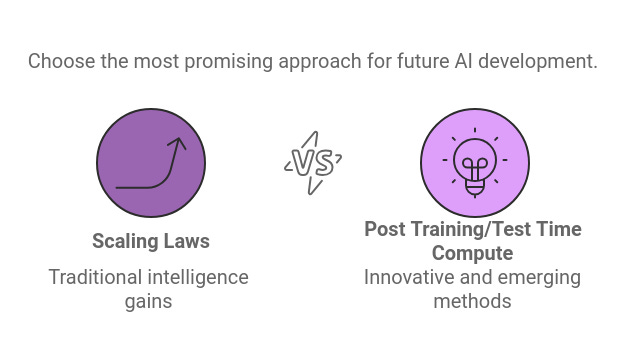

Scaling laws have ended and test-time compute is the current paradigm. Where are we headed?

1. Scaling-law-driven intelligence gains have ended (at least for now)

With Ilya Sutskever and OpenAI both agreeing that we are beyond scaling laws, we now have something close to a consensus that we have hit the limit of what more pretraining compute can deliver.

i) Most new models below 140B parameters

This was also apparent to some of us watching model announcements around and after the GPT-4o announcement in May 2024. We didn't see any model that crossed GPT-4 in scale. Even at OpenAI, most models rolled out from May onward were smaller than GPT-4. While there was news of GPT-4.5/5 in the air, it never materialized; instead we saw GPT-4o, GPT-4o-mini, and now o1-preview/mini/pro.

Microsoft and other frontier model companies followed suit and announced models largely smaller than 120B parameters.

ii) Data Wall

News about the data wall also started circulating around May/June, and the consensus theme was that synthetic data is the way forward. However, as we now know, synthetic data helped open models and smaller models much more than frontier models in terms of net improvement in demonstrated "intelligence".

2. Post Training and Test Time Compute

In the last few months, we started toying with ideas that could help these models extend scaling along other dimensions, such as the quality of post-training, including chain-of-thought (CoT). The term "test-time compute" started doing the rounds. It is the core idea behind OpenAI's o1 series as well as DeepSeek R1, and possibly many more.

The idea here is that you generate multiple candidate solutions for a given problem at test (inference) time, then use some sort of verifier to score them and iterate over the solution space. While this did bring the next gain in answer quality, the models were still limited by the search space and by the number of ways we could scale up the verifiers.
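The generate-then-verify loop described above can be sketched as a best-of-N search. This is only a minimal illustration under stated assumptions, not how o1 or R1 work internally: `propose_root` is a hypothetical stand-in for sampling one answer from a model, `score_root` is a hypothetical verifier, and the toy task (guess the integer square root of a target) is chosen purely so the example runs on its own.

```python
import random
from typing import Callable, Tuple

# Hypothetical type aliases for this sketch (not from any library).
Generator = Callable[[int, random.Random], int]
Verifier = Callable[[int, int], float]


def propose_root(target: int, rng: random.Random) -> int:
    """Stand-in for sampling one candidate answer from a model."""
    return rng.randint(0, 20)


def score_root(target: int, candidate: int) -> float:
    """Toy verifier: 0 means a perfect answer, more negative is worse."""
    return -abs(candidate * candidate - target)


def best_of_n(
    problem: int,
    generate: Generator,
    verify: Verifier,
    n: int,
    seed: int = 0,
) -> Tuple[int, float]:
    """Sample n candidates and return the one the verifier scores highest."""
    rng = random.Random(seed)
    candidates = [generate(problem, rng) for _ in range(n)]
    best = max(candidates, key=lambda c: verify(problem, c))
    return best, verify(problem, best)


if __name__ == "__main__":
    # More samples means more test-time compute spent on the same problem.
    for n in (1, 4, 16, 64):
        answer, score = best_of_n(144, propose_root, score_root, n)
        print(f"n={n:>2} answer={answer} score={score}")
```

Two limits from the text show up directly in this sketch: the result can never be better than the best candidate inside the generator's search space (here, integers 0 to 20), and it is only as trustworthy as the verifier that ranks the candidates.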

So while the solution works, the scaling opportunity here is limited by the input search space as well as by the verifiers.

As of this writing, we still do not have o1 with a function-calling interface. That is telling: reasoning over a search space intrinsic to the model is one thing, but bringing in external uncertainties expands the reasoning problem into a massive challenge.

Both of the above achievements have been extraordinary, and they set the path forward. What comes next would not have been possible without going through them.

So, where do we go in 2025? Here's where I believe we are headed.

1. We will move from a centralized model-learning paradigm to agent learning. There are many advantages to decentralizing the test-time compute problem.

2. It helps us pursue a path where the environmental details are scoped narrowly to the use case at hand.

3. With a narrower scope, defined by the agentic boundary, the new learning is deeper and more contextual. For example, a smaller agentic team working on a particular problem can generate better post-training datasets for the specific role and task, as well as for the environment it operates in.
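To make the last point concrete, here is a rough sketch of how a narrowly scoped agent could turn its own verified work into post-training data. Everything in it is an assumption for illustration: the `Episode` fields, the prompt/completion record shape, and the example role and environment names are hypothetical, and a real pipeline would need a much stronger pass/fail check.

```python
import json
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Episode:
    """One attempt by an agent at a task (hypothetical schema)."""
    role: str          # the agent's narrow role
    environment: str   # the system or tool the agent operates in
    task: str          # what the agent was asked to do
    response: str      # what the agent produced
    passed: bool       # result of a task-specific check


def collect_dataset(episodes: Iterable[Episode]) -> List[Dict[str, str]]:
    """Keep only verified episodes, tagged with role and environment."""
    records = []
    for ep in episodes:
        if not ep.passed:
            continue  # failed attempts are dropped in this simple sketch
        records.append({
            "prompt": f"[{ep.role} | {ep.environment}] {ep.task}",
            "completion": ep.response,
        })
    return records


def to_jsonl(records: Iterable[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(r) for r in records)


if __name__ == "__main__":
    log = [
        Episode("invoice-reconciler", "erp-sandbox",
                "Match invoice 17 to a purchase order", "PO-204", True),
        Episode("invoice-reconciler", "erp-sandbox",
                "Match invoice 18 to a purchase order", "PO-999", False),
    ]
    print(to_jsonl(collect_dataset(log)))
```

Because every record carries the role and the environment, the resulting dataset stays tied to the agent's bounded scope instead of being diluted into a general-purpose corpus.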